Introduction

Enterprises talk endlessly about being data-driven, yet decisions are often powered by information that is already outdated by the time it reaches a dashboard. Real-time data intelligence promises something better: signals as they happen, not after the moment has passed. Web scraping quietly sits at the center of this shift, pulling fresh information from the open web at enterprise scale. There is a certain irony here: the most valuable insights often live in plain sight, just scattered, unstructured, and moving fast. This is where modern organizations begin to rethink how intelligence is gathered and used.

What Is Real-Time Data Intelligence?

Real-time data intelligence refers to the ability to collect, process, and act on information the moment it becomes available. Unlike batch reports that summarize the past, this approach focuses on what is happening right now. Enterprises use it to detect market shifts, operational risks, or changes in customer behavior as they unfold. There is often a moment of realization, usually after a missed opportunity, when leaders recognize that timing matters as much as accuracy. Fresh data shortens feedback loops, sharpens strategy, and reduces reliance on assumptions that may no longer reflect reality.

Role of Web Scraping in Enterprise Data Pipelines

Web scraping functions as a bridge between the external world and internal decision systems. Public websites, marketplaces, forums, and news sources generate massive volumes of valuable signals every minute. When integrated correctly, web scraping services transform that scattered information into structured inputs for analytics and automation tools. This approach complements APIs, which often expose only a subset of the data, impose rate limits, or lag behind what is published on the page itself. There is a practical elegance to it: instead of waiting for curated feeds, enterprises observe markets directly. That direct line of sight can reveal patterns competitors do not notice until much later.
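
To make "structured inputs" concrete, here is a minimal sketch that turns a fragment of product-page HTML into a record using Python's standard-library `html.parser`. The class names `product-name` and `price`, the dollar-formatted price, and the helper names `ProductParser` and `extract_product` are hypothetical assumptions about a target page; real sites use their own markup and change it over time, so selectors like these need ongoing maintenance.

```python
from html.parser import HTMLParser
from typing import Dict, Optional


class ProductParser(HTMLParser):
    """Collect text from elements whose class names a field of interest.

    The class names below are hypothetical; a real site's markup will differ.
    """

    FIELDS = {"product-name": "name", "price": "price"}

    def __init__(self) -> None:
        super().__init__()
        self.record: Dict[str, str] = {}
        self._current: Optional[str] = None  # field whose element we are inside

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in self.FIELDS:
                self._current = self.FIELDS[cls]

    def handle_endtag(self, tag):
        # Any closing tag ends the capture, so this only suits flat markup.
        self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.record[self._current] = self.record.get(self._current, "") + data


def extract_product(html: str) -> Dict[str, object]:
    """Parse one product fragment into a normalized record."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    record: Dict[str, object] = {k: v.strip() for k, v in parser.record.items()}
    # Normalize a price string such as "$1,299.00" into a float.
    if "price" in record:
        record["price"] = float(str(record["price"]).replace("$", "").replace(",", ""))
    return record


html = '<div><h1 class="product-name">Widget Pro</h1><span class="price">$1,299.00</span></div>'
print(extract_product(html))  # {'name': 'Widget Pro', 'price': 1299.0}
```

Because any closing tag ends the capture, text that follows a nested tag inside a field element is dropped; for real pages, a library such as BeautifulSoup or lxml is a more robust choice.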

How Real-Time Data Extraction Works in Practice

Real-time data extraction begins with identifying high-value sources and defining what “fresh” truly means for the business. Automated scrapers then collect updates continuously, handling layout changes and traffic spikes along the way. The raw output is cleaned, normalized, and streamed into dashboards, alerts, or machine-learning systems. Somewhere between extraction and insight, complexity tends to appear: unexpected formats, missing fields, sudden blocks. This is also where well-designed pipelines shine, quietly turning chaos into clarity without slowing the flow of information.
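
The continuous-collection step can be sketched as a polling loop that fingerprints each fetched page and passes it downstream only when the content has changed. This is a minimal illustration under stated assumptions, not a production design: the injected `fetch` callable stands in for whatever HTTP client the pipeline uses, and the `rounds` and `interval_s` parameters are hypothetical.

```python
import hashlib
import time
from typing import Callable, Dict, Iterator, List, Tuple


def fingerprint(content: str) -> str:
    """Stable digest of page content, used to detect changes cheaply."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def poll_for_changes(
    urls: List[str],
    fetch: Callable[[str], str],
    rounds: int,
    interval_s: float = 60.0,
) -> Iterator[Tuple[str, str]]:
    """Fetch every URL once per round; yield (url, content) only when it changed."""
    last_seen: Dict[str, str] = {}
    for round_no in range(rounds):
        for url in urls:
            content = fetch(url)
            digest = fingerprint(content)
            if last_seen.get(url) != digest:
                last_seen[url] = digest
                yield url, content  # hand off to cleaning/normalization downstream
        if round_no < rounds - 1:
            time.sleep(interval_s)


# Usage with a fake fetcher whose page "a" changes on the third round.
pages = {"a": iter(["v1", "v1", "v2"]), "b": iter(["x", "x", "x"])}
for url, content in poll_for_changes(["a", "b"], lambda u: next(pages[u]), rounds=3, interval_s=0):
    print(url, content)
```

Hashing the raw page flags every rotating ad or embedded timestamp as a change; in practice it is usually better to hash the extracted, normalized record instead.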

Enterprise Use Cases for Real-Time Web Scraping

Across industries, real-time scraping powers decisions that cannot wait. Market intelligence teams monitor competitor pricing and product launches as they happen. Sales organizations enrich leads the moment prospects appear online. Risk and compliance teams track regulatory updates and breaking news before issues escalate. Each use case shares a common thread: speed reduces uncertainty. There is often a subtle shift in mindset once teams experience this. Planning becomes more dynamic, reactions more confident, and meetings shorter. Data stops being a historical artifact and starts behaving like a living signal.
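
For the competitor-pricing case, the core comparison is simple enough to sketch. The function below assumes prices have already been scraped into `{sku: price}` dictionaries from two consecutive runs and flags moves at or beyond a percentage threshold; the 5% default and the `PriceAlert` type are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PriceAlert:
    sku: str
    old_price: float
    new_price: float

    @property
    def pct_change(self) -> float:
        return (self.new_price - self.old_price) / self.old_price * 100.0


def detect_price_moves(
    previous: Dict[str, float],
    current: Dict[str, float],
    threshold_pct: float = 5.0,  # hypothetical default; tune per market
) -> List[PriceAlert]:
    """Compare two scrape snapshots and return moves at or above the threshold."""
    alerts: List[PriceAlert] = []
    for sku, new_price in current.items():
        old_price = previous.get(sku)
        if not old_price:
            continue  # new SKU or zero baseline: no meaningful percentage change
        alert = PriceAlert(sku, old_price, new_price)
        if abs(alert.pct_change) >= threshold_pct:
            alerts.append(alert)
    return alerts


previous = {"A": 100.0, "B": 50.0}
current = {"A": 90.0, "B": 51.0, "C": 20.0}
for alert in detect_price_moves(previous, current):
    print(alert.sku, round(alert.pct_change, 1))  # A -10.0
```

New SKUs such as `C` are skipped here; a launch-monitoring workflow would likely treat them as a separate alert type.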

Benefits of Web Scraping for Enterprises

The most obvious benefit is speed, but the deeper value lies in relevance. Real-time web data reflects current conditions, not averaged trends from weeks ago. Enterprises gain sharper forecasts, faster responses, and more accurate automation. Operational costs drop as manual research fades into the background. When supported by reliable web scraping services, data teams spend less time chasing updates and more time interpreting them. The result is not just better dashboards but better conversations, grounded in what is happening now rather than what already happened.

Challenges & Considerations

Real-time scraping is powerful, but it is not effortless. Websites change structures, introduce defenses, or publish data inconsistently. Legal and ethical boundaries require careful attention, especially at scale. Infrastructure must handle a constant data flow without breaking downstream systems. Many organizations learn this the hard way, usually after a once-reliable source silently fails. These challenges do not negate the value; they simply demand discipline. Thoughtful design, monitoring, and governance turn potential friction into manageable operational details rather than recurring emergencies.
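
One common symptom of a silently failing source is a sudden drop in record count: the page still loads, but after a layout change the scraper finds almost nothing. A minimal sketch of a check for that, assuming each run's record count is stored in a list, compares the latest run against the median of recent runs; the function name `yield_dropped`, the 50% ratio, and the 7-run window are hypothetical defaults to tune per source.

```python
from statistics import median
from typing import List


def yield_dropped(history: List[int], latest: int, min_ratio: float = 0.5, window: int = 7) -> bool:
    """Return True if the latest run yielded far fewer records than recent runs."""
    recent = history[-window:]
    if not recent:
        return False  # no baseline yet
    baseline = median(recent)
    if baseline == 0:
        return False  # source normally empty; a ratio test is meaningless
    return latest < baseline * min_ratio


history = [100, 98, 102, 101, 99]
print(yield_dropped(history, latest=3))   # True: worth alerting on
print(yield_dropped(history, latest=95))  # False
```

Using the median rather than the mean keeps a single unusually large or small run from distorting the baseline.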

Best Practices for Real-Time Scraping at Scale

Successful enterprise scraping starts with compliance and transparency, respecting site rules and regional regulations. Technically, resilient systems rely on proxy rotation, headless browsers for JavaScript-rendered pages, and continuous monitoring to adapt to change. Data quality checks matter just as much as extraction speed, because when real-time data extraction feeds analytics or AI models, small errors can propagate quickly. Mature teams treat scraping like any other mission-critical system, keeping it documented, observable, and continuously improved. That mindset often marks the difference between a fragile experiment and a dependable intelligence engine.
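
Data quality checks can start as plain validation rules applied before records reach analytics or AI models. The sketch below assumes a hypothetical schema with `sku`, `price`, and `scraped_at` fields; rejected records are kept with their reasons rather than silently discarded, so they can be inspected when a source changes.

```python
from typing import Any, Dict, Iterable, List, Tuple

REQUIRED_FIELDS = ("sku", "price", "scraped_at")  # hypothetical schema

Record = Dict[str, Any]


def validate_record(record: Record) -> List[str]:
    """Return a list of problems; an empty list means the record passes."""
    errors = [f"missing {f}" for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    price = record.get("price")
    if price not in (None, "") and (not isinstance(price, (int, float)) or price <= 0):
        errors.append("price must be a positive number")
    return errors


def split_valid(records: Iterable[Record]) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Separate clean records from rejected ones, keeping the rejection reasons."""
    valid: List[Record] = []
    rejected: List[Tuple[Record, List[str]]] = []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


valid, rejected = split_valid([
    {"sku": "A", "price": 9.99, "scraped_at": "2024-01-01T00:00:00Z"},
    {"sku": "B", "price": -1, "scraped_at": "2024-01-01T00:00:00Z"},
])
print(len(valid), [reasons for _, reasons in rejected])
```

A sudden rise in the rejection rate is itself a useful monitoring signal, since it often points to a layout or format change at the source.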

Build vs. Buy: Choosing the Right Approach

Some enterprises prefer building internal scraping platforms for maximum control. Others choose managed solutions to accelerate deployment and reduce maintenance. The tradeoff usually comes down to time, expertise, and tolerance for operational complexity. There is a familiar pattern here: building feels empowering until maintenance consumes more resources than expected. Buying feels expensive until the hidden costs of downtime appear. The most effective decision aligns with long-term strategy rather than short-term convenience, balancing ownership with reliability.

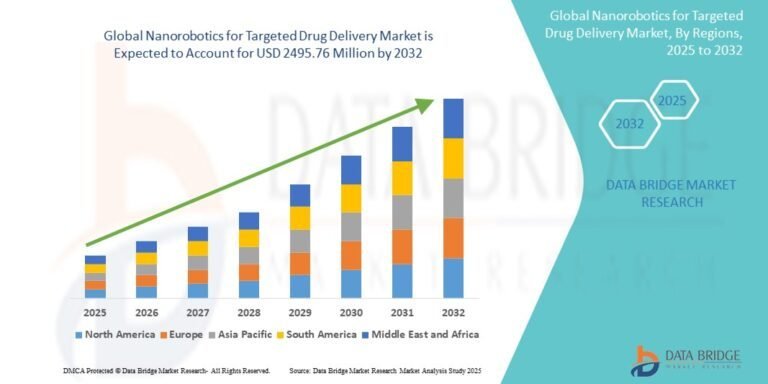

Future of Real-Time Data Intelligence

The future points toward automation layered on automation. AI-driven scrapers are likely to adapt to layout changes with less manual intervention. Predictive models may act on signals before humans notice them, and decision systems will probably move closer to autonomy, especially in pricing, risk, and supply chain management. Amid this progress, one idea remains constant: data loses value as it ages. Enterprises that embrace real-time intelligence will not just move faster; they will think differently, treating information as a continuously evolving asset rather than a static report.

Conclusion

Web scraping has evolved from a technical tactic into a strategic capability for enterprises seeking real-time insight. When combined with disciplined processes and clear objectives, it turns the open web into a living data source. The shift is subtle but profound: decisions become timely, strategies become adaptive, and surprises become rarer. In a landscape where conditions change by the hour, intelligence that arrives late is barely intelligence at all. The organizations that recognize this tend to stay one step ahead, quietly and consistently, and with far fewer regrets.

FAQs

What is web scraping in enterprise data intelligence?

Web scraping in enterprise data intelligence involves automatically collecting publicly available information from websites and converting it into structured data. This data is then analyzed to support strategic decisions, monitor markets, and identify trends in near real time. It allows organizations to observe external signals directly rather than relying solely on delayed reports or limited third-party feeds.

Is real-time web scraping legal for businesses?

Real-time web scraping is generally considered lawful when it targets publicly accessible data and respects website terms, robots.txt policies, and regional data regulations, though the specifics vary by jurisdiction. Enterprises must also avoid collecting personal or protected information. Legal review and ethical guidelines are essential to ensure scraping activities remain compliant and sustainable.
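
Checking robots.txt is only one part of compliance and does not by itself settle legality, but it is easy to automate. This sketch uses Python's standard-library `urllib.robotparser`; it parses robots.txt text that has already been fetched, and the helper name `is_allowed`, the user-agent string, and the URLs are hypothetical examples. In a live crawler, `RobotFileParser.set_url()` followed by `read()` fetches the file directly.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check a URL against already-fetched robots.txt content."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


robots_txt = """\
User-agent: *
Disallow: /private/
"""
print(is_allowed(robots_txt, "ExampleBot", "https://example.com/products"))      # True
print(is_allowed(robots_txt, "ExampleBot", "https://example.com/private/data"))  # False
```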

How often should real-time data be updated?

The update frequency depends on business goals and data volatility. Some use cases require minute-by-minute updates, while others benefit from hourly or daily refresh cycles. The key is aligning update speed with decision-making needs so systems remain responsive without overwhelming infrastructure.
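
One way to align refresh speed with data volatility is an adaptive interval: poll more often while a source is changing and back off while it stays quiet. The function below is a simple multiplicative heuristic, offered as a sketch rather than a standard method; the name `next_interval`, the 60-second floor, the one-hour ceiling, and the factor of 2 are placeholders to be set per use case.

```python
def next_interval(
    current_s: float,
    changed: bool,
    min_s: float = 60.0,
    max_s: float = 3600.0,
    factor: float = 2.0,
) -> float:
    """Shrink the polling interval after a change, grow it while the source is quiet.

    The result is clamped to [min_s, max_s]; the floor also caps request load
    on both the pipeline and the target site.
    """
    proposed = current_s / factor if changed else current_s * factor
    return max(min_s, min(max_s, proposed))


interval = 600.0
for changed in [False, False, True, True, True]:
    interval = next_interval(interval, changed)
    print(interval)  # 1200.0, 2400.0, 1200.0, 600.0, 300.0
```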

Which industries benefit most from real-time data intelligence?

Industries such as e-commerce, finance, logistics, travel, and media gain significant value from real-time intelligence. These sectors operate in fast-changing environments where timely insights directly affect pricing, risk management, customer engagement, and operational efficiency.

Can scraped data integrate with analytics and AI tools?

Yes, scraped data is commonly integrated into business intelligence platforms, data warehouses, and AI models. When properly cleaned and structured, it enhances forecasting, automation, and predictive analytics, enabling smarter and faster enterprise decision-making.
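
As a small illustration of the integration step, this sketch loads validated price records into SQLite using Python's standard-library `sqlite3` module, standing in for a data warehouse. The `competitor_prices` table, its columns, and the `load_prices` helper are hypothetical; the composite primary key combined with `INSERT OR IGNORE` makes repeated loads of the same snapshot idempotent.

```python
import sqlite3
from typing import Any, Dict, Iterable


def load_prices(conn: sqlite3.Connection, records: Iterable[Dict[str, Any]]) -> int:
    """Insert price records; return how many new rows were actually written."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS competitor_prices (
               sku        TEXT NOT NULL,
               price      REAL NOT NULL,
               scraped_at TEXT NOT NULL,
               PRIMARY KEY (sku, scraped_at)
           )"""
    )
    rows = [(r["sku"], r["price"], r["scraped_at"]) for r in records]
    before = conn.total_changes
    with conn:  # commits on success, rolls back on error
        # Rows already loaded (same sku and scraped_at) are skipped, not duplicated.
        conn.executemany("INSERT OR IGNORE INTO competitor_prices VALUES (?, ?, ?)", rows)
    return conn.total_changes - before


conn = sqlite3.connect(":memory:")
snapshot = [{"sku": "A", "price": 9.99, "scraped_at": "2024-01-01T00:00:00Z"}]
print(load_prices(conn, snapshot))  # 1
print(load_prices(conn, snapshot))  # 0: same snapshot loaded twice
```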