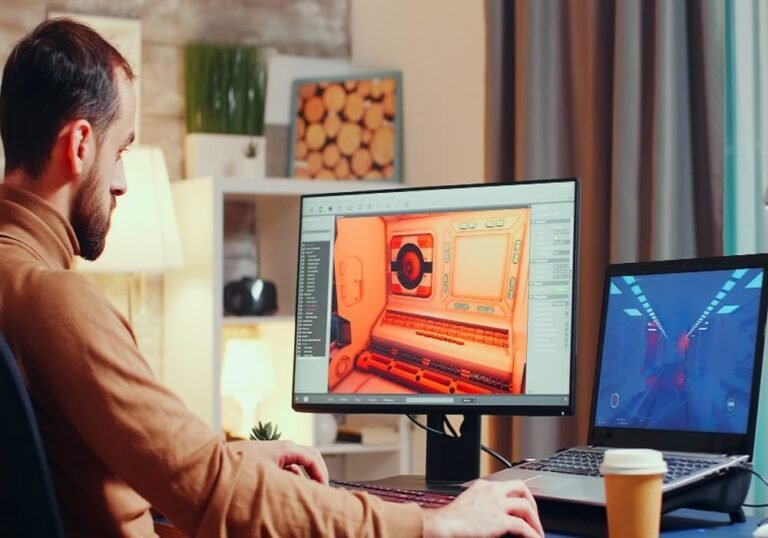

Computer vision has become a core component of modern artificial intelligence systems, powering applications such as autonomous vehicles, facial recognition, healthcare diagnostics, smart surveillance, and retail analytics. At the heart of all these systems lies one crucial process: video annotation. Without accurately annotated visual data, even the most advanced algorithms struggle to perform reliably in real-world scenarios.

In this blog post, we’ll explore the most widely used video annotation techniques in computer vision, explain how they work, and show why choosing the right image and video annotation method is critical for building high-performing AI models.

What Is Video Annotation in Computer Vision?

Video annotation is the process of labeling objects, actions, or events across video frames to make raw visual data understandable for machine learning models. Unlike static images, videos involve temporal continuity, making annotation more complex and resource-intensive.

Through image and video annotation, AI systems learn to recognize objects, track movement, interpret behavior, and analyze visual patterns over time. This structured data helps machines “see” and interpret the world accurately.

Why Video Annotation Techniques Matter

Not all video data is the same, and neither are annotation requirements. Selecting the wrong annotation technique can lead to:

Poor model accuracy

Inconsistent predictions

Increased training costs

Limited scalability

Different computer vision tasks demand different annotation strategies. Let’s break down the most important video annotation techniques used in computer vision today.

1. Bounding Box Annotation

Bounding box annotation is one of the most commonly used video annotation techniques. It involves drawing rectangular boxes around objects of interest in each video frame.

Use cases:

Object detection

Traffic monitoring

Retail analytics

Sports analytics

Bounding boxes are particularly effective when identifying and localizing objects such as vehicles, pedestrians, or products. In video annotation, these boxes are often linked across frames to track object movement over time.
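Labeling every frame by hand is expensive, so many annotation tools let annotators draw boxes on a few keyframes and fill in the frames between them automatically. The minimal sketch below shows linear interpolation of one object's box between keyframes. The `Box` type, the `(x_min, y_min, x_max, y_max)` pixel convention, and the helper names are assumptions for illustration and do not reflect any specific tool's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in pixels (assumed convention: x_min, y_min, x_max, y_max)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def interpolate_box(frame_a: int, box_a: Box, frame_b: int, box_b: Box, frame: int) -> Box:
    """Linearly interpolate a box for frame_a <= frame <= frame_b."""
    if not frame_a <= frame <= frame_b or frame_a == frame_b:
        raise ValueError("frame must lie between two distinct keyframes")
    t = (frame - frame_a) / (frame_b - frame_a)  # 0.0 at frame_a, 1.0 at frame_b

    def lerp(a: float, b: float) -> float:
        return a + t * (b - a)

    return Box(
        lerp(box_a.x_min, box_b.x_min),
        lerp(box_a.y_min, box_b.y_min),
        lerp(box_a.x_max, box_b.x_max),
        lerp(box_a.y_max, box_b.y_max),
    )


def fill_track(keyframes: dict[int, Box]) -> dict[int, Box]:
    """Expand sparse keyframe boxes for one object into a box for every frame in between."""
    frames = sorted(keyframes)
    track: dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        for f in range(start, end):
            if f == start:
                track[f] = keyframes[start]  # keep the human-drawn keyframe as-is
            else:
                track[f] = interpolate_box(start, keyframes[start], end, keyframes[end], f)
    if frames:
        track[frames[-1]] = keyframes[frames[-1]]
    return track


# A car drawn on frames 0 and 10 moves 20 px to the right.
track = fill_track({0: Box(10, 20, 50, 80), 10: Box(30, 20, 70, 80)})
print(track[5])  # halfway: Box(x_min=20.0, y_min=20.0, x_max=60.0, y_max=80.0)
```

Linear interpolation is only a starting point. Annotators still need to review the filled-in frames and correct any where the object speeds up, turns, or becomes occluded.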

2. Semantic Segmentation

Semantic segmentation assigns a class label to every pixel in a video frame. Unlike bounding boxes, this technique provides pixel-level precision, although all objects of the same class share a single label.

Use cases:

Medical imaging

Scene understanding

Autonomous driving

When trained on semantically segmented frames, AI models can differentiate between roads, sidewalks, vehicles, and pedestrians with high accuracy. The technique is labor- and compute-intensive, but it offers exceptional detail.
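In practice, a semantic segmentation label for one frame is a mask the same size as the frame, and each pixel holds a class ID. The sketch below uses plain nested lists and a hypothetical class table (0 = background, 1 = road, 2 = sidewalk, 3 = vehicle, 4 = pedestrian). Real datasets define their own IDs and usually store masks as image files or arrays. It shows two basic QA steps: validating the mask and measuring how much of the frame each class covers.

```python
from collections import Counter

# Hypothetical class table; real projects define their own IDs.
CLASSES = {0: "background", 1: "road", 2: "sidewalk", 3: "vehicle", 4: "pedestrian"}


def validate_mask(mask: list[list[int]], height: int, width: int) -> None:
    """Check that the mask matches the frame size and every pixel has a known class ID."""
    if len(mask) != height or any(len(row) != width for row in mask):
        raise ValueError("mask shape does not match frame size")
    unknown = {c for row in mask for c in row if c not in CLASSES}
    if unknown:
        raise ValueError(f"unknown class IDs: {sorted(unknown)}")


def class_coverage(mask: list[list[int]]) -> dict[str, float]:
    """Return the fraction of pixels assigned to each class present in the mask."""
    counts = Counter(c for row in mask for c in row)
    total = sum(counts.values())
    return {CLASSES[c]: n / total for c, n in sorted(counts.items())}


# A tiny 4x6 "frame": road along the bottom, a vehicle on the road, sidewalk on the right.
mask = [
    [0, 0, 0, 0, 2, 2],
    [0, 0, 0, 0, 2, 2],
    [1, 3, 3, 1, 2, 2],
    [1, 1, 1, 1, 2, 2],
]
validate_mask(mask, height=4, width=6)
print(class_coverage(mask))
```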

3. Instance Segmentation

Instance segmentation goes a step further than semantic segmentation by distinguishing between individual objects within the same class.

Example:

Two people walking side by side are labeled as separate entities, even though they belong to the same category.

Use cases:

Crowd analysis

Robotics

Advanced object interaction

This video annotation technique is especially valuable in environments where multiple objects overlap or interact frequently.
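One common way to store instance segmentation is an instance-ID mask paired with a table that maps each instance to its class. The sketch below encodes the two-people example above using a hypothetical schema, where 0 is background and every other value is an instance ID. Collapsing instances back to classes produces an ordinary semantic mask. The per-instance identity that collapse discards is exactly what instance segmentation adds.

```python
from collections import Counter

# Hypothetical schema: 0 = unlabeled background; every other value is an instance ID.
INSTANCE_CLASS = {1: "person", 2: "person", 3: "bicycle"}


def to_semantic(instance_mask: list[list[int]]) -> list[list[str]]:
    """Drop instance identity, keeping only the class of each pixel."""
    return [[INSTANCE_CLASS.get(i, "background") for i in row] for row in instance_mask]


def instances_per_class(instance_mask: list[list[int]]) -> dict[str, int]:
    """Count distinct object instances of each class visible in the frame."""
    ids = {i for row in instance_mask for i in row if i != 0}
    return dict(Counter(INSTANCE_CLASS[i] for i in ids))


# Two people side by side (instances 1 and 2) and a bicycle (instance 3).
frame = [
    [0, 1, 1, 2, 2, 0],
    [0, 1, 1, 2, 2, 0],
    [0, 0, 3, 3, 0, 0],
]
print(instances_per_class(frame))  # two persons, one bicycle
print(to_semantic(frame)[0])       # both people collapse to the same "person" label
```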

4. Polygon Annotation

Polygon annotation allows annotators to draw precise shapes around objects rather than using rectangular boxes. This results in tighter and more accurate labeling.

Use cases:

Irregular-shaped objects

Manufacturing quality inspection

Medical imaging

Polygon-based video annotation improves data quality by keeping background pixels out of the label, which can noticeably improve model performance, especially for thin or irregular objects.
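To see how much background a rectangular box would add, you can compare the area a polygon encloses with the area of its tightest bounding box. The sketch below computes polygon area with the shoelace formula. The vertex list is a made-up example. The "background fraction" is simply the share of the bounding box that falls outside the polygon, measured as continuous area rather than by counting pixels.

```python
def polygon_area(vertices: list[tuple[float, float]]) -> float:
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    n = len(vertices)
    if n < 3:
        raise ValueError("a polygon needs at least three vertices")
    twice_area = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def bounding_box_area(vertices: list[tuple[float, float]]) -> float:
    """Area of the tightest axis-aligned box around the polygon's vertices."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def background_fraction(vertices: list[tuple[float, float]]) -> float:
    """Share of the bounding box that lies outside the polygon."""
    return 1.0 - polygon_area(vertices) / bounding_box_area(vertices)


# A diamond-shaped object: a box drawn around it is half background.
diamond = [(50, 0), (100, 50), (50, 100), (0, 50)]
print(polygon_area(diamond), bounding_box_area(diamond), background_fraction(diamond))
# 5000.0 10000 0.5
```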

5. Keypoint and Landmark Annotation

Keypoint annotation focuses on labeling specific points on an object, such as joints, facial landmarks, or skeletal structures.

Use cases:

Human pose estimation

Gesture recognition

Facial analysis

This technique is heavily used in projects involving face image datasets, where facial landmarks such as the eyes, nose, and mouth are annotated across video frames to train recognition and expression analysis models.
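Keypoint labels are commonly stored as `(x, y, visibility)` triplets. The COCO keypoint format, for example, uses visibility 0 for "not labeled", 1 for "labeled but not visible", and 2 for "labeled and visible". The sketch below borrows that convention for a hypothetical five-point face schema. The point names and order are assumptions. It also computes the inter-ocular distance, which is often used to normalize landmark errors so that faces of different sizes can be compared fairly.

```python
import math
from dataclasses import dataclass

# Hypothetical 5-point face schema; real datasets define their own point order.
FACE_POINTS = ["left_eye", "right_eye", "nose_tip", "mouth_left", "mouth_right"]

# Visibility flags following the COCO keypoint convention.
NOT_LABELED, OCCLUDED, VISIBLE = 0, 1, 2


@dataclass
class Keypoint:
    x: float
    y: float
    v: int  # visibility flag


def to_flat(points: dict[str, Keypoint]) -> list[float]:
    """Flatten to [x1, y1, v1, x2, y2, v2, ...] in schema order; missing points become (0, 0, 0)."""
    flat: list[float] = []
    for name in FACE_POINTS:
        kp = points.get(name, Keypoint(0, 0, NOT_LABELED))
        flat.extend([kp.x, kp.y, kp.v])
    return flat


def interocular_distance(points: dict[str, Keypoint]) -> float:
    """Distance between the two eye landmarks, often used to normalize landmark error."""
    a, b = points["left_eye"], points["right_eye"]
    if a.v == NOT_LABELED or b.v == NOT_LABELED:
        raise ValueError("both eyes must be labeled")
    return math.hypot(b.x - a.x, b.y - a.y)


# Landmarks for one video frame; the right mouth corner was not labeled.
frame_points = {
    "left_eye": Keypoint(120, 80, VISIBLE),
    "right_eye": Keypoint(160, 80, VISIBLE),
    "nose_tip": Keypoint(140, 105, VISIBLE),
    "mouth_left": Keypoint(125, 130, OCCLUDED),  # e.g., hidden behind a hand
}
print(to_flat(frame_points))
print(interocular_distance(frame_points))  # 40.0
```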

6. Object Tracking Annotation

Object tracking is a critical component of video annotation that involves assigning a unique ID to objects and tracking them consistently across frames.

Use cases:

Surveillance systems

Autonomous vehicles

Sports performance analysis

Tracking helps AI systems understand object behavior, movement patterns, and interactions over time, making it a foundational technique in computer vision.
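Track IDs are often pre-filled automatically and then corrected by annotators. A very simple way to pre-fill them is greedy IoU (intersection over union) matching. Each box in a new frame inherits the ID of the previous-frame box it overlaps most, as long as that overlap clears a threshold. Otherwise it starts a new track. The sketch below implements that heuristic with an assumed threshold of 0.3. Production trackers add motion models and appearance features, so treat this as an illustration of ID assignment rather than a complete tracker.

```python
from typing import Optional

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed convention


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_ids(frames: list[list[Box]], threshold: float = 0.3) -> list[list[int]]:
    """Give each box a track ID, reusing the ID of the best-overlapping previous-frame box."""
    next_id = 0
    prev: list[tuple[int, Box]] = []
    result: list[list[int]] = []
    for boxes in frames:
        # Score every (previous track, current box) pair and match the highest IoU first.
        pairs = sorted(
            ((iou(pb, cb), pid, ci) for pid, pb in prev for ci, cb in enumerate(boxes)),
            reverse=True,
        )
        ids: list[Optional[int]] = [None] * len(boxes)
        used: set[int] = set()
        for score, pid, ci in pairs:
            if score < threshold:
                break  # remaining pairs overlap even less
            if pid in used or ids[ci] is not None:
                continue
            ids[ci] = pid
            used.add(pid)
        for ci in range(len(boxes)):
            if ids[ci] is None:  # no good match: start a new track
                ids[ci] = next_id
                next_id += 1
        prev = list(zip(ids, boxes))
        result.append(ids)
    return result


# Two objects drift slightly; in the third frame a new object appears far away.
frames = [
    [(0, 0, 10, 10), (20, 20, 30, 30)],
    [(1, 1, 11, 11), (21, 20, 31, 30)],
    [(2, 2, 12, 12), (50, 50, 60, 60)],
]
print(assign_ids(frames))  # [[0, 1], [0, 1], [0, 2]]
```

In this example, track 1 ends when its object leaves, and the newcomer receives a fresh ID (2) instead of taking over the lost one. Annotators typically review exactly those handoffs.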

7. Action and Event Annotation

Action annotation labels specific activities or events occurring within a video.

Examples:

Walking, running, sitting

Picking up objects

Suspicious behavior detection

This form of video annotation is essential for video understanding models used in security, healthcare monitoring, and smart city applications.
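Unlike spatial labels, action labels are usually temporal segments: a label plus a start and end frame (or timestamp). The sketch below stores segments using an assumed inclusive-frame convention. It then expands them into per-frame label sets, a form that frame-level models can consume directly. Segments may overlap, because several actions can happen at once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionSegment:
    label: str
    start_frame: int  # inclusive (assumed convention)
    end_frame: int    # inclusive

    def __post_init__(self) -> None:
        if self.end_frame < self.start_frame:
            raise ValueError("segment ends before it starts")


def per_frame_labels(segments: list[ActionSegment], num_frames: int) -> list[set[str]]:
    """Expand temporal segments into the set of active action labels at each frame."""
    frames: list[set[str]] = [set() for _ in range(num_frames)]
    for seg in segments:
        # Clip the segment to the video's frame range before marking frames.
        for f in range(max(seg.start_frame, 0), min(seg.end_frame, num_frames - 1) + 1):
            frames[f].add(seg.label)
    return frames


segments = [
    ActionSegment("walking", 0, 5),
    ActionSegment("picking_up_object", 4, 7),  # overlaps the end of "walking"
    ActionSegment("sitting", 8, 9),
]
labels = per_frame_labels(segments, num_frames=10)
print(labels[4])  # both "walking" and "picking_up_object" are active
```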

8. 3D Cuboid Annotation

3D cuboid annotation adds depth information by labeling objects in three-dimensional space within video frames.

Use cases:

Autonomous driving

Robot navigation

Warehouse automation

This advanced video annotation technique enables models to understand spatial relationships, distances, and object orientation.
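A common way to parameterize a 3D cuboid is by its center, its dimensions, and a heading (yaw) angle around the vertical axis. The sketch below converts that representation into the eight corner points, which can then be projected onto video frames or compared against sensor data. The axis convention is an assumption: z points up, yaw is measured counter-clockwise from the x axis, and length runs along the heading. Real datasets each define their own convention.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Cuboid:
    cx: float      # center x (e.g., meters)
    cy: float      # center y
    cz: float      # center z (assumed z-up)
    length: float  # extent along the heading direction
    width: float
    height: float
    yaw: float     # rotation about the z axis, radians, counter-clockwise from +x


def corners(c: Cuboid) -> list[tuple[float, float, float]]:
    """Return the 8 corners: bottom face first, then top face, each counter-clockwise."""
    cos_y, sin_y = math.cos(c.yaw), math.sin(c.yaw)
    half_l, half_w, half_h = c.length / 2, c.width / 2, c.height / 2
    footprint = [(half_l, half_w), (-half_l, half_w), (-half_l, -half_w), (half_l, -half_w)]
    result = []
    for dz in (-half_h, half_h):
        for dx, dy in footprint:
            # Rotate the local (dx, dy) offset by yaw, then translate to the center.
            x = c.cx + dx * cos_y - dy * sin_y
            y = c.cy + dx * sin_y + dy * cos_y
            result.append((x, y, c.cz + dz))
    return result


# A car-sized cuboid facing along +y (yaw = 90 degrees).
car = Cuboid(cx=10.0, cy=5.0, cz=0.8, length=4.0, width=2.0, height=1.6, yaw=math.pi / 2)
for corner in corners(car):
    print(tuple(round(v, 2) for v in corner))
```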

Choosing the Right Video Annotation Technique

Selecting the appropriate annotation method depends on:

Project goals

Industry use case

Model architecture

Required accuracy level

For example, autonomous driving systems often rely on a combination of bounding boxes, semantic segmentation, and object tracking, while healthcare applications may prioritize pixel-level precision.

Challenges in Video Annotation

Despite its importance, video annotation presents several challenges:

High time and cost investment

Consistency across frames

Managing large datasets

Maintaining annotation accuracy

This is why structured workflows and experienced annotation teams are crucial for delivering scalable image and video annotation solutions.
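Consistency across frames is one of the easier challenges to check automatically. The sketch below assumes a hypothetical flat export of `(frame, track_id, label)` rows. It flags two common problems: a track whose class label changes partway through, and a track with missing frames between its first and last appearance. Gaps can be legitimate, for example during full occlusion, so these results are review flags rather than hard errors.

```python
from collections import defaultdict
from typing import NamedTuple


class Row(NamedTuple):
    frame: int
    track_id: int
    label: str


def consistency_issues(rows: list[Row]) -> list[str]:
    """Flag tracks whose label changes or that skip frames between first and last sighting."""
    frames_by_track: dict[int, set[int]] = defaultdict(set)
    labels_by_track: dict[int, set[str]] = defaultdict(set)
    for r in rows:
        frames_by_track[r.track_id].add(r.frame)
        labels_by_track[r.track_id].add(r.label)

    issues = []
    for tid in sorted(frames_by_track):
        labels = labels_by_track[tid]
        if len(labels) > 1:
            issues.append(f"track {tid}: label changes ({', '.join(sorted(labels))})")
        frames = frames_by_track[tid]
        missing = sorted(set(range(min(frames), max(frames) + 1)) - frames)
        if missing:
            issues.append(f"track {tid}: missing frames {missing}")
    return issues


rows = [
    Row(0, 1, "car"), Row(1, 1, "car"), Row(2, 1, "truck"),  # label flips mid-track
    Row(0, 2, "person"), Row(3, 2, "person"),                # frames 1-2 missing
]
for issue in consistency_issues(rows):
    print(issue)
```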

The Role of Video Annotation in AI Model Performance

High-quality video annotation directly impacts:

Model accuracy

Training efficiency

Real-world reliability

Poor annotation leads to biased or unreliable models, while well-annotated data accelerates development and improves deployment success.

Final Thoughts

As computer vision continues to evolve, video annotation remains a foundational pillar of AI development. From bounding boxes and segmentation to keypoints and action labeling, each annotation technique plays a specific role in helping machines interpret visual data accurately.

Understanding and applying the right image and video annotation techniques helps ensure that AI models are not only intelligent but also dependable, scalable, and ready for real-world challenges.

Whether you’re building autonomous systems, healthcare solutions, or facial recognition tools, investing in the right video annotation strategy is essential for long-term success.