In the race to build smarter AI systems, algorithms often steal the spotlight. New architectures, advanced neural networks, and improved optimization techniques are announced almost every month. Yet despite all this innovation, many AI projects still fail to deliver accurate, reliable results. A common reason is surprisingly simple: even the most sophisticated algorithm cannot rise above the quality of the data it learns from. In computer vision, high-quality visual training data matters far more than the algorithm itself.

The Foundation of Every Computer Vision Model

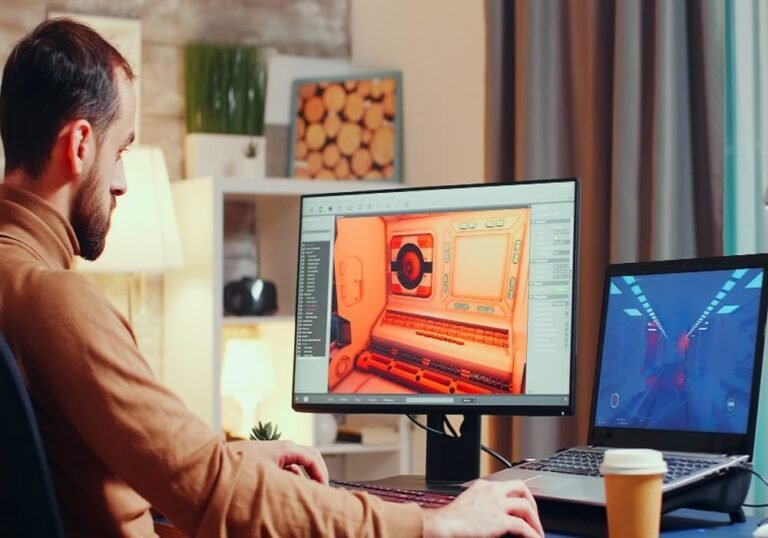

At its core, computer vision is about teaching machines to see and understand the world. This understanding comes from examples. Images and videos show the model what objects look like, how faces differ, and how actions unfold over time. If these examples are unclear, inconsistent, or poorly labeled, the model learns incorrect patterns. No amount of algorithmic tuning can fully compensate for flawed visual data.

This is where structured image and video annotation becomes critical. Accurate labeling ensures that models receive clear signals about what they are seeing, helping them generalize better when exposed to real-world scenarios.

Why Algorithms Alone Are Not Enough

Algorithms define how learning happens, but data defines what is learned. A powerful model trained on noisy or biased data will simply learn those flaws more thoroughly. For example, if facial images are poorly annotated or lack diversity, the model may perform well under narrow conditions but fail in real-world deployments.

In contrast, a simpler algorithm trained on carefully curated and accurately labeled data often delivers stronger and more stable results. This is why many successful AI teams invest heavily in data preparation before experimenting with complex model architectures.

The Impact of Annotation Quality on Model Accuracy

High-quality annotation directly influences how well a model understands visual information. Clear boundaries, consistent labels, and contextual accuracy help models distinguish between similar objects and subtle variations. In facial recognition and analysis tasks, well-prepared face image datasets play a crucial role. Small errors in labeling facial features or identities can lead to misclassification, bias, or security risks.

Consistency across large datasets is equally important. When visual data is annotated using standardized guidelines, models learn patterns more effectively and produce reliable predictions across different environments.
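One common way to check whether annotators are actually applying shared guidelines consistently is to have two annotators label the same sample and measure how often they agree. The sketch below computes Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The `cohens_kappa` helper and the sample labels are illustrative assumptions, not part of any particular annotation tool.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Hypothetical helper: Cohen's kappa for two annotators labeling the same items."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("expected two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over labels.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_chance = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both annotators used the same single label everywhere
    return (p_observed - p_chance) / (1 - p_chance)


# Illustrative labels for six images from two annotators.
annotator_1 = ["car", "car", "person", "bike", "car", "person"]
annotator_2 = ["car", "person", "person", "bike", "car", "person"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.739
```

A value near 1 indicates strong agreement; values well below that suggest the guidelines may need clarification before more data is labeled.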

Understanding Motion and Context Through Video Data

Images capture moments, but videos capture behavior. For applications like surveillance, autonomous driving, or activity recognition, understanding motion and sequence is essential. This is where video annotation adds significant value. Frame-by-frame labeling allows models to learn how objects move, interact, and change over time.
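Labeling every frame by hand is expensive, so many video annotation workflows label selected keyframes and fill in the frames between them. Below is a minimal sketch of that idea, assuming axis-aligned boxes stored as `(x_min, y_min, x_max, y_max)` and simple linear motion between keyframes. `interpolate_boxes` is a hypothetical helper; in practice annotators would still review and correct the interpolated frames.

```python
from typing import Dict, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def interpolate_boxes(keyframes: Dict[int, Box]) -> Dict[int, Box]:
    """Hypothetical helper: fill every frame between annotated keyframes by linear interpolation."""
    frames = sorted(keyframes)
    filled: Dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        box_start, box_end = keyframes[start], keyframes[end]
        for frame in range(start, end):
            t = (frame - start) / (end - start)  # 0.0 at the start keyframe
            filled[frame] = tuple(s + t * (e - s) for s, e in zip(box_start, box_end))
    if frames:
        filled[frames[-1]] = keyframes[frames[-1]]  # keep the last keyframe as annotated
    return filled


# A car annotated at frames 0 and 4, moving 20 pixels to the right.
boxes = interpolate_boxes({0: (10, 10, 50, 50), 4: (30, 10, 70, 50)})
print(boxes[2])  # (20.0, 10.0, 60.0, 50.0)
```

Linear interpolation is only a starting point: it breaks down when objects accelerate, turn, or become occluded, which is exactly where careful human labeling of the in-between frames still matters.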

Without properly annotated video data, models may struggle to interpret actions or predict outcomes. High-quality temporal annotations provide context that algorithms alone cannot infer, regardless of their complexity.

Reducing Bias Through Better Visual Data

Bias in AI systems often originates from imbalanced or poorly curated datasets. When certain demographics, environments, or conditions are underrepresented, models produce skewed results. Improving visual training data quality helps address this issue at its source.
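A simple first check for underrepresentation is to count how often each value of a metadata attribute appears and flag the rare ones. The sketch below assumes each image carries a hypothetical tag such as its lighting condition, and it uses an arbitrary 10% cutoff; a real audit would choose attributes and thresholds per application.

```python
from collections import Counter


def underrepresented(attribute_values, min_share=0.10):
    """Hypothetical helper: return each group whose share of the dataset is below min_share."""
    counts = Counter(attribute_values)
    total = sum(counts.values())
    return {
        group: count / total
        for group, count in counts.items()
        if count / total < min_share
    }


# Illustrative lighting tags for 100 images; the 10% cutoff is an assumption.
lighting = ["day"] * 80 + ["night"] * 15 + ["fog"] * 5
print(underrepresented(lighting))  # {'fog': 0.05}
```

Counting is only a starting point: a group can be well represented overall yet still be poorly annotated, so this check complements, rather than replaces, annotation review.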

When data collection and annotation prioritize diversity, accuracy, and fairness, AI systems become more inclusive and dependable. This is especially important for applications involving facial analysis, where ethical and regulatory expectations are increasingly strict.

Scalability Depends on Data, Not Just Code

As AI systems scale, data challenges grow faster than algorithmic ones. Managing millions of images or thousands of video hours requires robust workflows, quality checks, and review processes. Image and video annotation at scale must balance speed with precision to avoid degrading data quality over time.
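One common quality check at scale compares annotators' bounding boxes against a reviewed reference set using intersection over union (IoU) and sends low-overlap items back for review. This is a minimal sketch; the `flag_for_review` helper, the dictionaries keyed by item ID, and the 0.7 threshold are all illustrative assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_for_review(annotations, reference, threshold=0.7):
    """Hypothetical helper: IDs whose annotated box overlaps its reviewed reference box too little."""
    return [
        item_id
        for item_id, box in annotations.items()
        if item_id in reference and iou(box, reference[item_id]) < threshold
    ]


# Illustrative data: img_002's box is shifted 5 pixels from the reviewed reference.
annotations = {"img_001": (0, 0, 10, 10), "img_002": (0, 0, 10, 10)}
reference = {"img_001": (0, 0, 10, 10), "img_002": (5, 0, 15, 10)}
print(flag_for_review(annotations, reference))  # ['img_002']
```

Checking a sampled subset against reviewed references, rather than every item, is one way such a check can keep pace with annotation volume.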

Teams that prioritize scalable data pipelines often see better long-term results than those focused solely on refining models. Clean, well-organized datasets make retraining, fine-tuning, and expansion far more efficient.

Real-World Performance Starts with Real-World Data

Laboratory results can be misleading. A model may perform exceptionally well on a test dataset but fail when exposed to real-world variability. High-quality visual training data bridges this gap by reflecting real-world conditions such as varied lighting, camera angles, and behaviors.

When datasets are realistic and accurately labeled, models adapt better to new inputs. This leads to improved deployment outcomes, fewer errors, and higher trust in AI-driven systems.
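One way to expose this gap before deployment is to break evaluation results down by capture condition instead of reporting a single aggregate score. The sketch below assumes each evaluation record is tagged with a hypothetical condition label such as `"day"` or `"night"`; the helper name and the sample records are illustrative.

```python
from collections import defaultdict


def accuracy_by_condition(records):
    """Hypothetical helper: accuracy per condition from (condition, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, predicted, actual in records:
        total[condition] += 1
        correct[condition] += int(predicted == actual)
    return {condition: correct[condition] / total[condition] for condition in total}


# Illustrative evaluation records: (condition, predicted label, true label).
results = [
    ("day", "car", "car"),
    ("day", "person", "person"),
    ("day", "bike", "bike"),
    ("night", "car", "person"),
    ("night", "person", "person"),
]
print(accuracy_by_condition(results))  # {'day': 1.0, 'night': 0.5}
```

In this toy example the overall accuracy is 80%, but the night slice is only 50%, the kind of weakness a single test-set score can hide.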

Conclusion

While algorithms continue to evolve, data remains the true backbone of computer vision. High-quality visual training data defines how well an AI system understands the world, adapts to change, and performs in real environments. Investing in accurate annotation, diverse datasets, and strong quality control delivers returns that no algorithm upgrade can match.