In today’s digital landscape, visual content reigns supreme. Images and videos dominate social media feeds, websites, and marketing campaigns. But with the sheer volume of user-generated content being shared every second, ensuring that this material aligns with community guidelines is more crucial than ever. This is where visual content moderation steps in.

Visual content moderation isn’t just a buzzword; it’s an essential practice for brands aiming to create safe online spaces. From identifying explicit imagery to filtering out harmful messages, effective moderation can protect your brand’s reputation while fostering positive engagement among users.

As technology evolves, so do the methods available for analyzing images and videos. Traditional techniques have served their purpose well but may not be enough to tackle today’s challenges head-on. Advanced techniques are emerging to enhance accuracy and efficiency in moderating visual content, making them indispensable tools for businesses.

Let’s dive deeper into what visual content moderation entails and explore both traditional and cutting-edge approaches that can elevate your strategy in today’s dynamic digital world.

What is Visual Content Moderation?

Visual content moderation is the process of reviewing and managing images and videos shared on online platforms. It ensures that user-generated content adheres to established guidelines, promoting a safe digital environment.

This practice involves identifying inappropriate or harmful visuals, such as explicit material, hate symbols, or graphic violence. Moderators assess each piece of content against community standards, either before it becomes visible to other users or after it has been published and reported.

Automation often plays a key role in visual moderation. Advanced algorithms can quickly scan large volumes of data. However, human oversight remains vital for nuanced judgment calls that technology may miss.

In essence, visual content moderation protects brands while enhancing user experience across various platforms. It’s about maintaining quality and trust within an ever-evolving digital landscape where visuals speak volumes.

The Importance of Visual Content Moderation

Visual content is everywhere. From social media platforms to e-commerce websites, images and videos play a crucial role in user engagement.

However, not all visual content is appropriate or safe. Inappropriate images can harm brand reputation and create negative experiences for users. This underlines the need for effective moderation.

Moderation ensures that only suitable visuals are displayed. It protects communities from harmful imagery while fostering a positive environment.

Moreover, moderation can indirectly affect search visibility. Search engines may filter or demote pages that contain spam or explicit material, so leaving harmful uploads unchecked can hurt how a site appears in results.

By implementing robust moderation strategies, businesses not only support compliance with applicable legal requirements but also build trust among their audience. Users feel safer knowing that the platform actively maintains quality control over what they see.

In today’s digital landscape, taking visual content seriously makes all the difference in creating an enjoyable user experience.

Traditional Techniques for Image and Video Analysis

Traditional techniques for image and video analysis often rely on manual processes. Human moderators review content to identify inappropriate visuals, such as violence or explicit material. This method can be effective but is time-consuming.

Another common approach involves rule-based systems. These systems apply predefined rules to filter out unwanted content based on specific criteria, such as file type, image dimensions, or a match against a list of known prohibited files. While they automate some tasks, they can struggle with nuanced imagery.
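To make the idea concrete, here is a minimal sketch of a rule-based filter. It is an illustration only: the `Upload` fields, the rule names, and every limit (the blocked hash, the allowed extensions, the size cap) are hypothetical assumptions, not values from any particular platform.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Upload:
    """Metadata for one uploaded file (hypothetical fields)."""
    filename: str
    size_bytes: int
    width: int
    height: int
    sha256: str


@dataclass
class Rule:
    name: str
    violates: Callable[[Upload], bool]


# Illustrative values only; a real deployment would load these from config.
BLOCKED_HASHES = {"deadbeef"}
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".mp4"}
MAX_SIZE_BYTES = 50 * 1024 * 1024


def extension(filename: str) -> str:
    """Return the lowercase extension including the dot, or '' if none."""
    dot = filename.rfind(".")
    return filename[dot:].lower() if dot != -1 else ""


RULES: List[Rule] = [
    Rule("blocked_hash", lambda u: u.sha256 in BLOCKED_HASHES),
    Rule("bad_extension", lambda u: extension(u.filename) not in ALLOWED_EXTENSIONS),
    Rule("too_large", lambda u: u.size_bytes > MAX_SIZE_BYTES),
    Rule("too_small", lambda u: u.width < 32 or u.height < 32),
]


def evaluate(upload: Upload, rules: List[Rule] = RULES) -> List[str]:
    """Return the names of all rules the upload violates, in rule order."""
    return [rule.name for rule in rules if rule.violates(upload)]
```

Each rule looks only at metadata, which is why this kind of filter is fast but blind to what the image actually shows.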

Image recognition technology has also been a staple in traditional moderation efforts. Simple algorithms detect certain patterns or colors in images, flagging them for further review. However, this method may miss context or subtleties that only a human eye can catch.
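A color-based check of this kind might look like the sketch below. It treats an image as a plain list of RGB tuples so it runs without any imaging library, and the numeric thresholds are rough illustrative assumptions rather than tuned values.

```python
from typing import Iterable, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def looks_like_skin(pixel: Pixel) -> bool:
    """Crude RGB threshold test; the cutoffs are illustrative assumptions."""
    r, g, b = pixel
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_ratio(pixels: Iterable[Pixel]) -> float:
    """Fraction of pixels that pass the color test (0.0 for an empty image)."""
    total = 0
    hits = 0
    for pixel in pixels:
        total += 1
        hits += looks_like_skin(pixel)
    return hits / total if total else 0.0


def needs_review(pixels: Iterable[Pixel], threshold: float = 0.4) -> bool:
    """Flag the image for human review when enough pixels match."""
    return skin_ratio(pixels) >= threshold
```

A beach photo, a close-up portrait, and a wooden table could all pass this test, which shows why such detectors only flag content for review instead of making the final call.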

Video analysis typically utilizes frame-by-frame inspection alongside audio cues to determine the appropriateness of the content. Yet, this approach lacks scalability and becomes cumbersome with larger volumes of media. As demands increase, these methods face limitations that require innovation and advancement in moderation techniques.
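One common way to ease the scaling problem is to inspect a sample of frames at a fixed interval instead of every frame. The sketch below assumes a hypothetical `score_frame_at` callback that returns a risk score between 0 and 1 for the frame at a given timestamp; how that score is produced, and the 0.8 threshold, are assumptions for illustration. Audio cues are not covered here.

```python
from typing import Callable, Dict, List


def sample_timestamps(duration_s: float, interval_s: float = 1.0) -> List[float]:
    """Timestamps (in seconds) to inspect, starting at 0 and strictly before the end."""
    if duration_s <= 0 or interval_s <= 0:
        return []
    stamps = []
    i = 0
    while i * interval_s < duration_s:
        stamps.append(i * interval_s)
        i += 1
    return stamps


def score_video(
    duration_s: float,
    score_frame_at: Callable[[float], float],  # hypothetical per-frame scorer
    interval_s: float = 1.0,
    flag_threshold: float = 0.8,
) -> Dict[str, object]:
    """Score sampled frames and report the peak score and flagged timestamps."""
    scores = [(t, score_frame_at(t)) for t in sample_timestamps(duration_s, interval_s)]
    flagged = [t for t, s in scores if s >= flag_threshold]
    peak = max((s for _, s in scores), default=0.0)
    return {"frames_checked": len(scores), "peak_score": peak, "flagged_at": flagged}
```

A wider interval checks fewer frames and costs less, but a violation shorter than the interval can fall between samples and go unseen.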

Advanced Techniques for Visual Content Moderation

Advanced techniques in visual content moderation leverage cutting-edge technologies to enhance accuracy and efficiency. Machine learning algorithms play a pivotal role, enabling systems to learn from vast datasets. This adaptability allows for improved recognition of inappropriate or harmful content.

Another significant innovation is the use of computer vision. It processes images and videos quickly, identifying objects, scenes, and on-image text that fall outside community guidelines. Modern models can pick up some context, going beyond simple pattern or color matching, although they can still miss nuance in harder cases.

Natural language processing (NLP) further complements these methods by analyzing captions or descriptions associated with visuals. By understanding sentiment and intent, it helps filter out misleading or offensive material more effectively.

These advancements create a multi-layered approach to content moderation. They not only increase reliability but also reduce the manual workload on human moderators, allowing them to focus on more complex cases that require judgment calls.
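The layering described here can be sketched as a small routing function. It assumes two upstream models have already produced risk scores between 0 and 1, one for the image and one for its caption; the score names and both thresholds are hypothetical.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


def route(
    image_score: float,
    caption_score: float,
    approve_below: float = 0.2,  # illustrative threshold
    reject_above: float = 0.9,   # illustrative threshold
) -> Decision:
    """Auto-handle the clear cases and send everything in between to a person."""
    risk = max(image_score, caption_score)  # the riskiest signal drives the decision
    if risk >= reject_above:
        return Decision.REJECT
    if risk < approve_below:
        return Decision.APPROVE
    return Decision.HUMAN_REVIEW
```

Widening the gap between the two thresholds sends more items to human moderators. Narrowing it trusts the models more and leaves fewer cases for people to check.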

Benefits and Challenges of Using Advanced Techniques

Advanced techniques in visual content moderation offer numerous benefits. They can improve accuracy, reducing both false positives (legitimate content flagged by mistake) and false negatives (violations that slip through). No system eliminates either kind of error, but better precision means fewer legitimate uploads are held back.

Moreover, these methods can process large volumes of images and videos rapidly. Automation saves time for moderators who can focus on more nuanced cases requiring human judgment.

However, challenges exist as well. Advanced algorithms may struggle with context or cultural nuances. What is acceptable in one culture might be offensive in another.

Additionally, implementing such systems requires substantial investment in technology and training. Organizations must balance cost-effectiveness with the need for sophisticated tools to maintain a safe online environment.

There’s also a risk of over-reliance on automation, which can let errors go unnoticed if automated decisions are not audited regularly. Careful integration of advanced techniques into existing frameworks is vital for success.

Implementing a Visual Content Moderation System

Implementing a visual content moderation system requires careful planning and the right tools. Start by identifying your specific needs. Are you moderating user-generated images, videos, or both? Clear objectives will guide your approach.

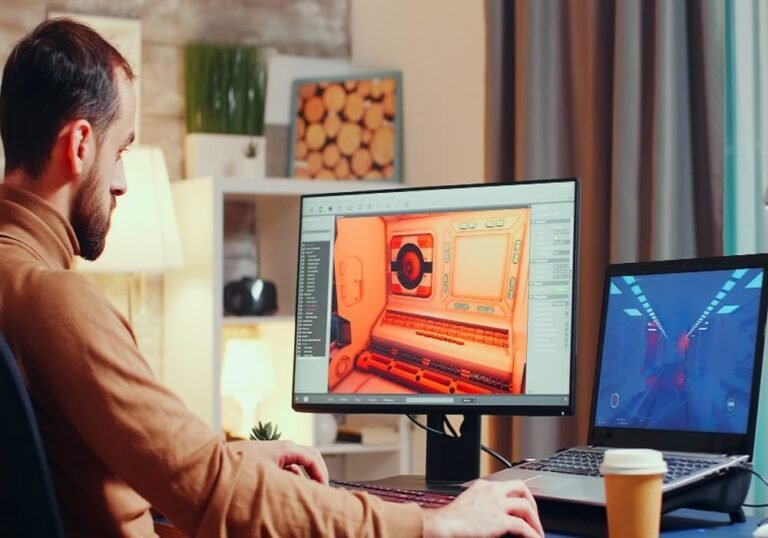

Next, choose between a fully automated solution and a combination of AI technology and human oversight. Automated tools can quickly filter out inappropriate content using machine learning algorithms that recognize harmful imagery.

Training is key for any team involved in moderation. Equip them with knowledge about community guidelines and the cultural context behind various content types. This understanding will enhance their ability to make nuanced decisions.

Integrate feedback loops into the system to continuously improve accuracy. Regular updates are essential as new trends emerge in visual content creation.
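One simple form of feedback loop is to use moderators' verdicts to re-tune the score threshold at which content gets flagged. The sketch below assumes each reviewed item is stored as a `(model_score, human_says_violation)` pair and picks, from a list of candidate thresholds, the one that disagrees with the human verdicts least often. The record format and the candidate values are assumptions for illustration.

```python
from typing import List, Tuple

# One reviewed item: (model risk score 0-1, moderator's verdict).
Review = Tuple[float, bool]


def errors_at(threshold: float, reviews: List[Review]) -> int:
    """Count items where flagging at this threshold disagrees with the moderator."""
    return sum(
        (score >= threshold) != is_violation
        for score, is_violation in reviews
    )


def retune_threshold(reviews: List[Review], candidates: List[float]) -> float:
    """Pick the candidate with the fewest disagreements; ties go to the lower
    (more cautious) threshold."""
    return min(candidates, key=lambda t: (errors_at(t, reviews), t))


reviews = [
    (0.95, True), (0.80, True), (0.70, False),
    (0.60, True), (0.40, False), (0.10, False),
]
best = retune_threshold(reviews, [0.3, 0.5, 0.75, 0.9])
```

Counting every disagreement equally is a simplification. A platform that considers missed violations worse than wrongly held uploads would weight the two error types differently.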

Monitor performance metrics closely to ensure effectiveness over time. Adjustments may be necessary based on these insights to maintain high standards of quality and relevance in your moderation efforts.
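Which metrics to track depends on the platform, but precision, recall, and false positive rate are a reasonable starting point. The sketch below computes them from counts gathered by auditing a sample of decisions; the counts in the usage lines are made-up numbers for illustration.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    true_positives: int   # violations correctly flagged
    false_positives: int  # legitimate content flagged by mistake
    false_negatives: int  # violations that were missed
    true_negatives: int   # legitimate content correctly allowed


def precision(c: Counts) -> float:
    """Of everything flagged, what share was a real violation?"""
    flagged = c.true_positives + c.false_positives
    return c.true_positives / flagged if flagged else 0.0


def recall(c: Counts) -> float:
    """Of all real violations, what share was caught?"""
    violations = c.true_positives + c.false_negatives
    return c.true_positives / violations if violations else 0.0


def false_positive_rate(c: Counts) -> float:
    """Of all legitimate content, what share was wrongly flagged?"""
    legitimate = c.false_positives + c.true_negatives
    return c.false_positives / legitimate if legitimate else 0.0


# Made-up audit sample: 1,000 decisions checked by hand.
audit = Counts(true_positives=80, false_positives=20,
               false_negatives=10, true_negatives=890)
print(f"precision={precision(audit):.2f} recall={recall(audit):.2f} "
      f"fpr={false_positive_rate(audit):.3f}")
```

Tracking these numbers over time, not just once, is what reveals drift as new kinds of content appear.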

Conclusion

Visual content moderation is crucial for any platform that allows user-generated images and videos. As the digital landscape evolves, so do the techniques to ensure safe and appropriate content. Businesses need to stay ahead of trends in visual analysis to protect their brand reputation while fostering a positive user experience.

Advanced techniques offer innovative ways to enhance traditional strategies. From machine learning and computer vision to NLP-based caption analysis, these methods can provide greater accuracy and efficiency. While they come with benefits such as speed and scalability, challenges like limited cultural context, implementation cost, and algorithm bias must be addressed.

Implementing a robust visual content moderation system is vital for organizations seeking effective management of their online presence. By selecting the right tools and technologies, businesses can create a safer environment for users without compromising engagement or creativity.

Investing in comprehensive content moderation services not only safeguards your platform but also builds trust with users. Prioritizing this aspect of your operations ensures that you remain competitive in an ever-changing digital world, setting the stage for growth and longevity.